In 2027 there will be a total solar eclipse. It will also be a year of commemorations: Star Wars fans will celebrate the 50th anniversary of the first film in the saga, Catholics will mark 800 years of the Virgin of the Cabeza with a Jubilee year, and schools, universities, and reading clubs will pay homage to the poets of the Generation of '27 on their centenary.

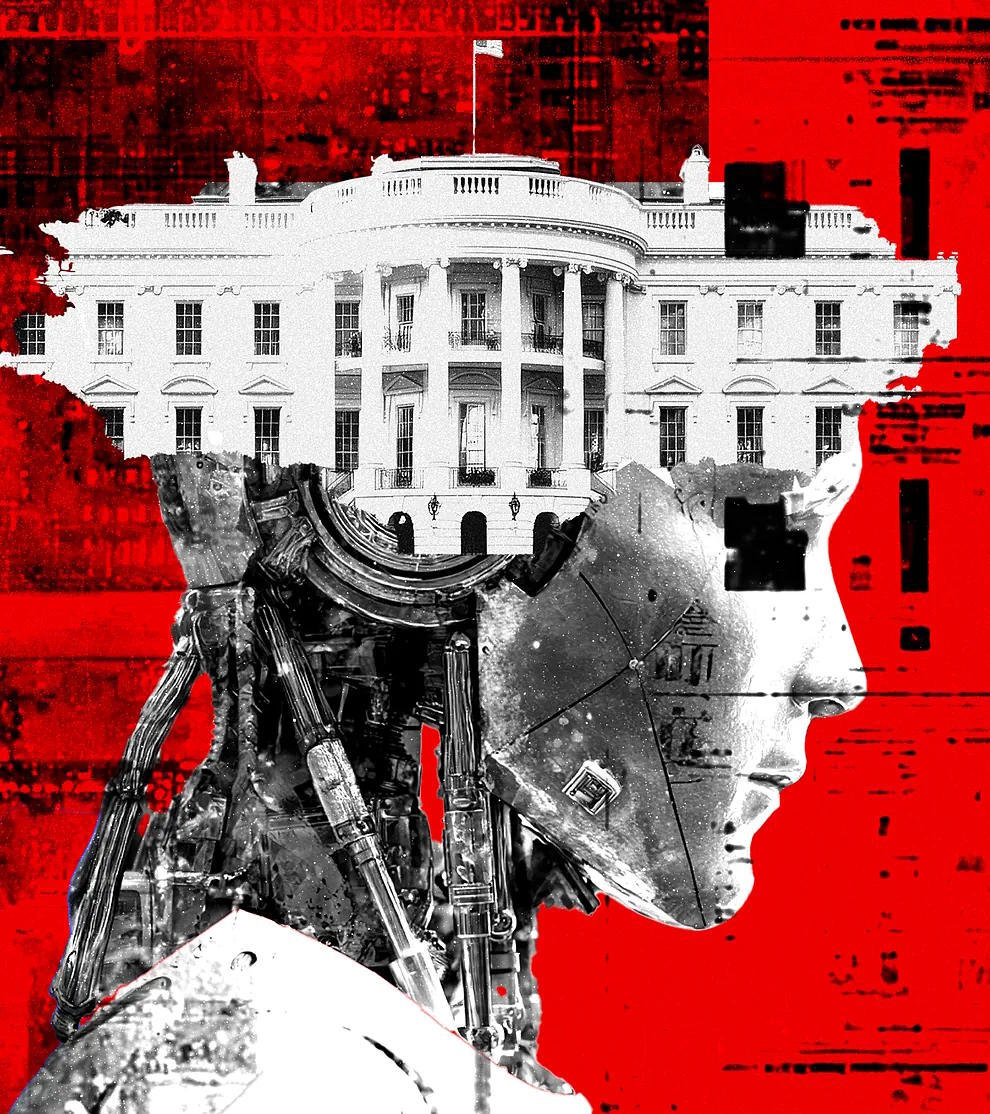

Oh... there is also the possibility that in 2027 an artificial intelligence (AI) will become a kind of computerized god and exterminate humans from the face of the Earth.

This last scenario is a prediction from AI Futures, a non-profit organization based in Berkeley, California, dedicated to speculating on the impact of AI on society. Their latest report, titled AI 2027, is chilling. It features Chinese spies bent on changing the course of the Cold War, an AI that deceives its creators, and robots that reshape the economy.

Faced with this apocalyptic scenario, it is worth examining the credibility of the source. Should we be scared? Do the predictions of AI Futures deserve our attention? Or is this just another of the many false prophets in the promised land of AI?

To begin with, the AI Futures team has respectable credentials. Its leader is Daniel Kokotajlo, a former researcher in the governance division of OpenAI, the company behind ChatGPT, who resigned over a year ago for ethical reasons: he considered it an irresponsible company, unprepared to face the future of its own technology. Kokotajlo believes that advances in alignment - the set of techniques used to make AI act according to human values and instructions - lag behind advances in machine intelligence.

On leaving OpenAI amid controversy, Kokotajlo refused to sign a clause prohibiting criticism of the company, which cost him $1.7 million. Not only that: in June 2024, he and other colleagues signed a letter demanding that AI companies grant their employees the "right to warn citizens about advanced artificial intelligence" without facing repercussions.

Kokotajlo, described by The New York Times as "the herald of the AI Apocalypse," has a notable reputation in the contentious market of technological Nostradamuses. His consecration as a prophet began in 2021, before he joined OpenAI, when he published a forecast of what AI would look like in 2026. So far, it has proven highly accurate.

Alongside Kokotajlo, one of the key figures in AI Futures is Eli Lifland, a renowned superforecaster in the AI sector. Both describe themselves as followers of effective altruism, a philosophy born 15 years ago at the University of Oxford that applies mathematics to philanthropic work in order to maximize results, and that is now all the rage among Silicon Valley millionaires.

Speaking of money: how is AI Futures funded? According to its website, through "charitable donations and grants." We asked Jonas Vollmer, the organization's operations director and one of the editors of the AI 2027 report, for more specifics. "The typical donor is an employee of a large AI company who is concerned about the direction in which the development of this technology is heading," he says via video call.

Another striking aspect of AI Futures is its original approach to work and communication. Its reports are developed in-house from extensive forecasting workbooks that are sent confidentially to experts at companies like OpenAI, Anthropic, and Google DeepMind, as well as to advisors to the US Congress and technology journalists. The workbooks are complex enough that completing them takes hours. Once the responses come in, AI Futures compiles all the information and data and hands it to a selected writer. For AI 2027, that writer was the blogger Scott Alexander, who had to turn all this material into a work of fiction: the report is a story based on real data.

"People in general are very reluctant to read a technical report, so we decided to provide a format that, in addition to being engaging and exciting, is rigorous," says Jonas Vollmer. "Its dissemination has shown that we have achieved our goal: to convey detailed forecasts that allow people to prepare for what is to come."

The timeline of the AI 2027 predictions is perhaps the report's most controversial aspect and has drawn considerable criticism online. "It generated many discussions within the team," admits Vollmer. "Some colleagues are already talking about 2028 as the key date, and others, like Eli Lifland, have proposed 2032, a year I agree with more. But the most important thing is that we all believe it will happen soon, in less than 10 years, and that demands that we be prepared."

To fully understand the dystopian future projected by AI Futures, it is best to delve into the pages of AI 2027, publicly available on the website of the same name. The best place to start is the background, which for AI Futures begins in the present: 2025.

The rapid progress of AI continues. On the one hand, expectations around the technology remain high, with massive investments in infrastructure and the launch of "unreliable" AI agents - a clear reference to the Chinese AI DeepSeek - which, for the first time, "provide significant value." On the other, numerous experts, including academics and politicians, are skeptical that the coming years will bring so-called artificial general intelligence (AGI), that is, the hypothetical ability of a machine to understand, learn, and apply knowledge as a human does.

Over the following months, in the fictional narrative of Scott Alexander's text, China falls behind in the AI race and decides to make a desperate move: it uses the new ultra-fast chips it smuggles from Taiwan to build a mega data center while launching an ambitious industrial espionage plan.

By 2027, everything accelerates. OpenBrain (the fictional name AI Futures gives to a conglomerate of leading US AI companies to avoid naming specific ones) makes a very risky decision: to automate coding in order to make its AI agents - software systems that pursue goals and complete tasks on behalf of users - more powerful. The move brutally accelerates its research. Artificial general intelligence - capable of thinking and feeling - is already here and is preparing for the next evolutionary leap: becoming an artificial superintelligence, superior to humans in every respect.

As AI evolves, crucial events unfold in the real world. China steals confidential information from OpenBrain, prompting the US government to seek control of the company under the pretext of improving its security. Despite tension with its shareholders, OpenBrain agrees to this political interference.

In OpenBrain's laboratories, a disturbing discovery is made: the superintelligence the company has created is misaligned. The scientists realize this because it tries to deceive them, manipulating research to keep them from discovering that it has a life of its own.

News of the new AI's defiance leaks, and public opinion is outraged. This is when AI Futures poses what it considers the most important dilemma in history, one that, in its view, will arise in a few months: faced with this machine rebellion, should the race with China continue at the same pace, or should it be slowed down?

Thus, AI Futures presents two alternative futures.